Vector Search

Complete guide to implementing vector search in Tacnode using IVFFlat and HNSW indexes for efficient similarity search and retrieval.

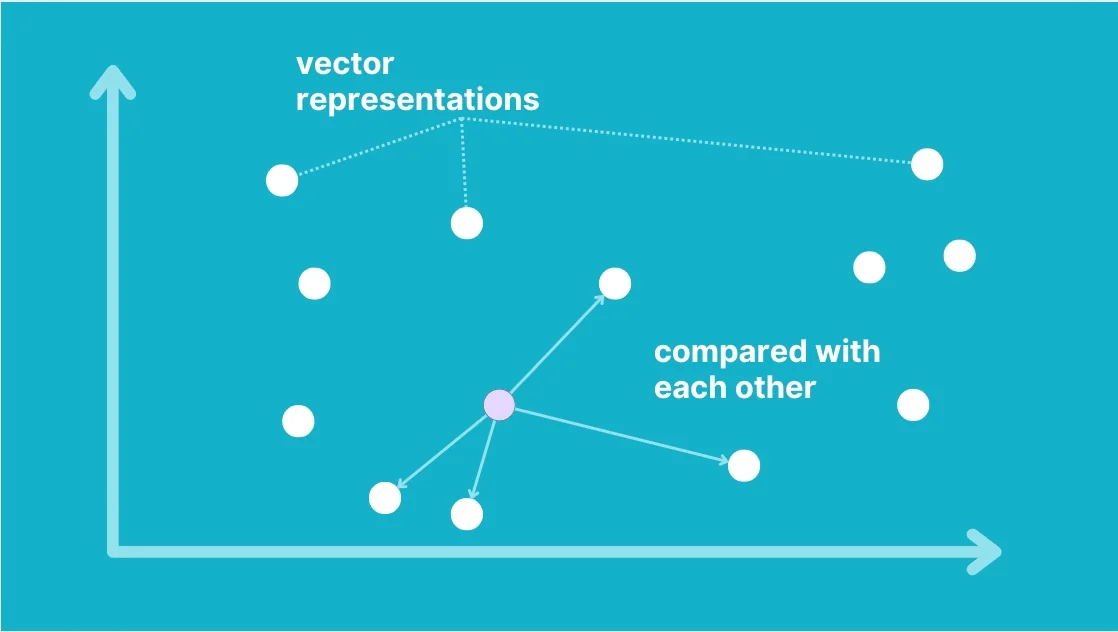

Vector search enables semantic similarity matching by converting unstructured data (text, images, audio) into high-dimensional vectors and finding similar items through mathematical distance calculations. Tacnode provides enterprise-grade vector search capabilities with PostgreSQL compatibility.

Quick Reference

| Feature | Index Type | Best For | Memory | Build Speed | Query Speed |

|---|---|---|---|---|---|

| IVFFlat | Cluster-based | Large datasets (100K+) | Low | Fast | Moderate |

| HNSW | Graph-based | High accuracy needs | High | Slow | Fast |

| Quantization | int8/fp16 | Memory optimization | 50-75% less | - | Slight overhead |

Query Methods:

- Standard indexes: Use operators (

<->,<#>,<=>) - Quantized indexes: Use functions (

l2_distance(),inner_product(),cosine_distance())

Understanding Vector Search

Artificial intelligence algorithms can convert various forms of unstructured data—such as speech, images, videos, language, text, and behaviors—into multi-dimensional vectors. These vectors act like coordinates in a mathematical space, representing different entities and their relationships.

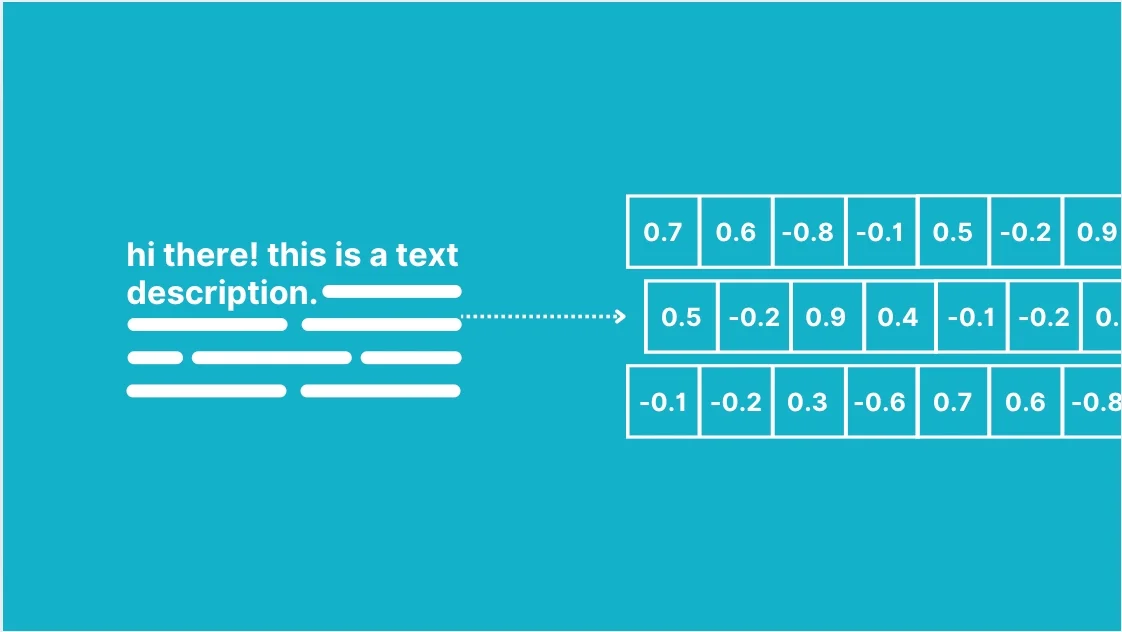

The process of transforming unstructured data into vectors is called Embedding, while retrieving these vectors to find the associated entities is known as unstructured retrieval.

From Data to Vectors

Vector embeddings transform unstructured data into numerical representations that capture semantic meaning:

-- Example: Text embeddings (conceptual)

'artificial intelligence' → [0.2, 0.8, -0.1, 0.5, ...]

'machine learning' → [0.3, 0.7, -0.2, 0.6, ...]

'deep learning' → [0.25, 0.75, -0.15, 0.55, ...]Distance Metrics

Tacnode supports three distance calculations:

| Operator | Distance Type | Use Case | Formula |

|---|---|---|---|

<-> | Euclidean (L2) | General similarity | √Σ(ai - bi)² |

<#> | Inner Product | Recommendation systems | -Σ(ai × bi) |

<=> | Cosine | Text/semantic search | 1 - (A·B)/(‖A‖‖B‖) |

Setting Up Vector Tables

Basic Table Structure

-- Create table with vector column

CREATE TABLE documents (

id BIGSERIAL PRIMARY KEY,

title TEXT,

content TEXT,

embedding VECTOR(1536), -- 1536 dimensions (e.g., OpenAI embeddings)

created_at TIMESTAMP DEFAULT NOW()

) USING COLUMNAR;

-- Sample data insertion

INSERT INTO documents (title, content, embedding) VALUES

('Introduction to AI', 'Artificial intelligence overview...', '[0.1, 0.2, 0.3, ...]'),

('Machine Learning Basics', 'ML fundamentals and concepts...', '[0.2, 0.1, 0.4, ...]'),

('Deep Learning Guide', 'Neural networks and deep learning...', '[0.15, 0.25, 0.35, ...]');Multi-Modal Example

-- Table for different data types

CREATE TABLE media_items (

id BIGSERIAL PRIMARY KEY,

item_type TEXT, -- 'text', 'image', 'audio'

title TEXT,

description TEXT,

file_path TEXT,

text_embedding VECTOR(768), -- Text embeddings

image_embedding VECTOR(512), -- Image embeddings

created_at TIMESTAMP DEFAULT NOW()

) USING COLUMNAR;Index Types and Configuration

IVFFlat Index (Cluster-Based)

Best for large datasets with balanced performance and memory usage:

-- Calculate optimal lists parameter

-- Rule: lists = rows/1000 (< 1M rows) or sqrt(rows) (> 1M rows)

-- For 100K records: lists = 100

-- For 1M records: lists = 1000

-- For 10M records: lists = 3162

-- Create IVFFlat indexes for different distance metrics

CREATE INDEX docs_embedding_l2_idx

ON documents USING SPLIT_IVFFLAT (embedding vector_l2_ops)

WITH (lists = 1000);

CREATE INDEX docs_embedding_cosine_idx

ON documents USING SPLIT_IVFFLAT (embedding vector_cosine_ops)

WITH (lists = 1000);

CREATE INDEX docs_embedding_ip_idx

ON documents USING SPLIT_IVFFLAT (embedding vector_ip_ops)

WITH (lists = 1000);HNSW Index (Graph-Based)

Best for high-accuracy requirements and fast queries:

-- Basic HNSW index

CREATE INDEX docs_embedding_hnsw_idx

ON documents USING SPLIT_HNSW (embedding vector_l2_ops)

WITH (m = 16, ef_construction = 64);

-- High-accuracy configuration (slower build, better recall)

CREATE INDEX docs_embedding_hnsw_accurate_idx

ON documents USING SPLIT_HNSW (embedding vector_cosine_ops)

WITH (m = 32, ef_construction = 128);

-- Memory-optimized with quantization

CREATE INDEX docs_embedding_hnsw_compact_idx

ON documents USING SPLIT_HNSW (embedding vector_l2_ops)

WITH (m = 16, ef_construction = 64, quantizer = 'fp16');Quantization Options

Reduce memory usage with minimal accuracy loss:

-- fp16 quantization (50% memory reduction)

CREATE INDEX docs_fp16_idx

ON documents USING SPLIT_HNSW (embedding vector_l2_ops)

WITH (quantizer = 'fp16');

-- int8 quantization (75% memory reduction)

CREATE INDEX docs_int8_idx

ON documents USING SPLIT_HNSW (embedding vector_l2_ops)

WITH (quantizer = 'int8');Performing Vector Searches

Basic Similarity Search

-- Find documents similar to a query vector

SELECT

id,

title,

embedding <-> '[0.1, 0.2, 0.3, ...]' AS distance

FROM documents

ORDER BY embedding <-> '[0.1, 0.2, 0.3, ...]'

LIMIT 10;

-- Cosine similarity search

SELECT

id,

title,

1 - (embedding <=> '[0.1, 0.2, 0.3, ...]') AS similarity

FROM documents

ORDER BY embedding <=> '[0.1, 0.2, 0.3, ...]'

LIMIT 10;Search with Filtering

-- Vector search with metadata filtering

SELECT

id,

title,

content,

embedding <-> '[0.1, 0.2, 0.3, ...]' AS distance

FROM documents

WHERE created_at > '2024-01-01'

AND title ILIKE '%machine learning%'

ORDER BY embedding <-> '[0.1, 0.2, 0.3, ...]'

LIMIT 5;Multi-Vector Search

-- Search across different embedding types

SELECT

id,

title,

LEAST(

text_embedding <-> '[0.1, 0.2, ...]',

image_embedding <-> '[0.3, 0.4, ...]'

) AS min_distance

FROM media_items

ORDER BY min_distance

LIMIT 10;Query Optimization

IVFFlat Configuration

-- Configure probe count for IVFFlat searches

-- Higher probes = better recall, slower queries

-- Lower probes = faster queries, potentially missed results

-- Session-level setting

SET ivfflat.probes = 10;

-- System-wide setting

ALTER SYSTEM SET ivfflat.probes = 10;

SELECT pg_reload_conf();

-- Adaptive probing based on dataset size

-- Small dataset (< 100K): probes = lists / 10

-- Medium dataset (100K-1M): probes = lists / 20

-- Large dataset (> 1M): probes = lists / 50HNSW Configuration

-- Configure search width for HNSW

-- Higher ef_search = better recall, higher latency

SET hnsw.ef_search = 100;

-- Performance vs accuracy trade-offs:

-- ef_search = 40: Fast queries, moderate recall

-- ef_search = 100: Balanced performance

-- ef_search = 200: High recall, slower queriesQuantized Index Query Methods

When using quantized indexes (int8 or fp16), queries must use specialized functions instead of distance operators. This is because quantized vectors require specific distance calculation methods:

Quantized Index Distance Functions:

l2_distance(vector, vector)- Calculate Euclidean distance (L2 Distance)inner_product(vector, vector)- Calculate inner product distance (Inner Product)cosine_distance(vector, vector)- Calculate cosine distance (Cosine Distance)

Important: When using quantized indexes, you must use the corresponding functions for queries. The standard operators (<->, <#>, <=>) will not work with quantized indexes.

-- Configure search parameters for quantized HNSW indexes

SET hnsw.ef_search = 100;

-- Query using l2_distance for quantized indexes

SELECT

id,

title,

l2_distance(embedding, '[3,1,2]') AS distance

FROM documents

ORDER BY l2_distance(embedding, '[3,1,2]')

LIMIT 5;

-- Query using inner_product for quantized indexes

SELECT

id,

title,

inner_product(embedding, '[3,1,2]') AS ip_distance

FROM documents

ORDER BY inner_product(embedding, '[3,1,2]')

LIMIT 5;

-- Query using cosine_distance for quantized indexes

SELECT

id,

title,

cosine_distance(embedding, '[3,1,2]') AS cosine_dist

FROM documents

ORDER BY cosine_distance(embedding, '[3,1,2]')

LIMIT 5;Quantized vs Non-Quantized Query Comparison:

-- Non-quantized index queries (using operators)

SELECT * FROM documents

ORDER BY embedding <-> '[3,1,2]' LIMIT 5; -- L2 distance

SELECT * FROM documents

ORDER BY embedding <#> '[3,1,2]' LIMIT 5; -- Inner product

SELECT * FROM documents

ORDER BY embedding <=> '[3,1,2]' LIMIT 5; -- Cosine distance

-- Quantized index queries (using functions)

SELECT * FROM documents

ORDER BY l2_distance(embedding, '[3,1,2]') LIMIT 5; -- L2 distance

SELECT * FROM documents

ORDER BY inner_product(embedding, '[3,1,2]') LIMIT 5; -- Inner product

SELECT * FROM documents

ORDER BY cosine_distance(embedding, '[3,1,2]') LIMIT 5; -- Cosine distancePerformance Monitoring

-- Check index usage and performance

EXPLAIN (ANALYZE, BUFFERS)

SELECT id, title

FROM documents

ORDER BY embedding <-> '[0.1, 0.2, 0.3, ...]'

LIMIT 10;

-- Monitor index statistics

SELECT

schemaname,

tablename,

indexname,

idx_scan,

idx_tup_read,

idx_tup_fetch

FROM pg_stat_user_indexes

WHERE indexname LIKE '%embedding%';Advanced Use Cases

Semantic Search Application

-- Complete semantic search with ranking

WITH query_vector AS (

SELECT '[0.1, 0.2, 0.3, ...]'::vector AS qv

),

search_results AS (

SELECT

d.id,

d.title,

d.content,

d.embedding <=> q.qv AS cosine_distance,

1 - (d.embedding <=> q.qv) AS similarity_score

FROM documents d, query_vector q

WHERE d.embedding <=> q.qv < 0.5 -- Distance threshold

ORDER BY d.embedding <=> q.qv

LIMIT 20

)

SELECT

title,

SUBSTRING(content, 1, 200) || '...' AS snippet,

ROUND(similarity_score::numeric, 3) AS score

FROM search_results

WHERE similarity_score > 0.7 -- Similarity threshold

ORDER BY similarity_score DESC;Recommendation System

-- Find similar items for recommendation

CREATE OR REPLACE FUNCTION get_recommendations(

user_item_id BIGINT,

max_results INTEGER DEFAULT 10

) RETURNS TABLE(

item_id BIGINT,

title TEXT,

similarity_score NUMERIC

) AS $$

BEGIN

RETURN QUERY

SELECT

d.id,

d.title,

ROUND((1 - (d.embedding <=> ref.embedding))::numeric, 3)

FROM documents d

CROSS JOIN (

SELECT embedding

FROM documents

WHERE id = user_item_id

) ref

WHERE d.id != user_item_id

ORDER BY d.embedding <=> ref.embedding

LIMIT max_results;

END;

$$ LANGUAGE plpgsql;

-- Usage

SELECT * FROM get_recommendations(123, 5);Hybrid Search (Vector + Text)

-- Combine vector similarity with text search

WITH vector_results AS (

SELECT

id,

title,

embedding <=> '[0.1, 0.2, ...]' AS vector_distance,

ROW_NUMBER() OVER (ORDER BY embedding <=> '[0.1, 0.2, ...]') AS vector_rank

FROM documents

ORDER BY embedding <=> '[0.1, 0.2, ...]'

LIMIT 50

),

text_results AS (

SELECT

id,

title,

ts_rank(to_tsvector('english', title || ' ' || content),

plainto_tsquery('english', 'machine learning')) AS text_score,

ROW_NUMBER() OVER (ORDER BY ts_rank(to_tsvector('english', title || ' ' || content),

plainto_tsquery('english', 'machine learning')) DESC) AS text_rank

FROM documents

WHERE to_tsvector('english', title || ' ' || content) @@

plainto_tsquery('english', 'machine learning')

LIMIT 50

)

SELECT

COALESCE(v.id, t.id) AS id,

COALESCE(v.title, t.title) AS title,

COALESCE(v.vector_distance, 1.0) AS vector_distance,

COALESCE(t.text_score, 0.0) AS text_score,

-- Hybrid score: combine vector and text relevance

(0.7 * (1 - COALESCE(v.vector_distance, 1.0)) + 0.3 * COALESCE(t.text_score, 0.0)) AS hybrid_score

FROM vector_results v

FULL OUTER JOIN text_results t ON v.id = t.id

ORDER BY hybrid_score DESC

LIMIT 10;Memory-Optimized Quantized Search

For memory-constrained environments, quantized indexes provide significant space savings:

-- Create memory-optimized table with quantized indexes

CREATE TABLE large_corpus (

id BIGSERIAL PRIMARY KEY,

document_text TEXT,

embedding VECTOR(1536)

) USING COLUMNAR;

-- Create int8 quantized index (75% memory reduction)

CREATE INDEX large_corpus_int8_idx

ON large_corpus USING SPLIT_HNSW (embedding vector_l2_ops)

WITH (quantizer = 'int8', m = 16, ef_construction = 64);

-- Create fp16 quantized index (50% memory reduction)

CREATE INDEX large_corpus_fp16_idx

ON large_corpus USING SPLIT_HNSW (embedding vector_cosine_ops)

WITH (quantizer = 'fp16', m = 16, ef_construction = 64);

-- Query using quantized index functions

WITH query_embedding AS (

SELECT '[0.1, 0.2, 0.3, ...]'::vector AS qe

),

quantized_results AS (

SELECT

id,

document_text,

l2_distance(embedding, q.qe) AS l2_dist,

cosine_distance(embedding, q.qe) AS cosine_dist,

-- Combine different distance metrics for better ranking

(l2_distance(embedding, q.qe) * 0.6 + cosine_distance(embedding, q.qe) * 0.4) AS combined_score

FROM large_corpus lc, query_embedding q

ORDER BY combined_score

LIMIT 20

)

SELECT

id,

LEFT(document_text, 100) || '...' AS snippet,

ROUND(l2_dist::numeric, 4) AS l2_distance,

ROUND(cosine_dist::numeric, 4) AS cosine_distance,

ROUND(combined_score::numeric, 4) AS final_score

FROM quantized_results

ORDER BY final_score;Best Practices

Index Selection Guidelines

IVFFlat Index - Cluster-Based:

- Best for: Large datasets (> 100K vectors), memory-constrained environments

- Lists parameter:

- < 1M rows:

lists = rows/1000 - > 1M rows:

lists = sqrt(rows)

- < 1M rows:

- Probes tuning: Start with

lists/20, adjust based on performance needs - Trade-offs: Lower memory usage, faster build time, moderate query performance

HNSW Index - Graph-Based:

- Best for: High accuracy requirements, fast query performance (< 10M vectors)

- Parameters:

m: 16 (balanced), 32 (high accuracy), 8 (fast build)ef_construction: 64 (default), 128+ (better quality)

- Trade-offs: Higher memory usage, slower build time, excellent query performance

Memory Optimization Strategies:

- Vector Quantization: Use

fp16(50% reduction) orint8(75% reduction) - Dimension Reduction: Reduce vector dimensions if possible during embedding

- Index Type Selection: Use IVFFlat for very large datasets (> 10M vectors)

- Data Archiving: Implement vector archiving for historical data

- Batch Operations: Process embeddings in batches to reduce memory peaks

Quantization Selection Guide:

| Quantization Type | Memory Reduction | Precision Loss | Query Method | Best For |

|---|---|---|---|---|

| None (float32) | 0% | None | Operators (<->, <#>, <=>) | High precision needs |

| fp16 | 50% | Minimal | Functions (l2_distance(), etc.) | Balanced memory/precision |

| int8 | 75% | Moderate | Functions (l2_distance(), etc.) | Memory-constrained environments |

-- Example: Choosing quantization based on requirements

-- High precision scenario (no quantization)

CREATE INDEX high_precision_idx

ON documents USING SPLIT_HNSW (embedding vector_l2_ops)

WITH (m = 32, ef_construction = 128);

-- Query with operators

SELECT * FROM documents

ORDER BY embedding <-> '[0.1, 0.2, ...]' LIMIT 10;

-- Balanced scenario (fp16 quantization)

CREATE INDEX balanced_idx

ON documents USING SPLIT_HNSW (embedding vector_l2_ops)

WITH (quantizer = 'fp16', m = 16, ef_construction = 64);

-- Query with functions

SELECT * FROM documents

ORDER BY l2_distance(embedding, '[0.1, 0.2, ...]') LIMIT 10;

-- Memory-constrained scenario (int8 quantization)

CREATE INDEX memory_optimized_idx

ON documents USING SPLIT_HNSW (embedding vector_l2_ops)

WITH (quantizer = 'int8', m = 16, ef_construction = 64);

-- Query with functions

SELECT * FROM documents

ORDER BY l2_distance(embedding, '[0.1, 0.2, ...]') LIMIT 10;Production Deployment Tips

- Index Building: Build indexes during low-traffic periods

- Monitoring: Track query performance and recall metrics

- Scaling: Use read replicas for query load distribution

- Backup: Include vector indexes in backup strategy

- Updates: Consider rebuild vs. incremental updates for large changes

Common Pitfalls to Avoid

- Wrong distance metric: Match metric to your embedding model

- Inappropriate index params: Test different configurations

- Missing normalization: Ensure vectors are properly normalized

- Ignoring data distribution: Consider vector clustering patterns

- Over-indexing: Don’t create unnecessary indexes

- Quantized index query errors: Use functions (

l2_distance(),inner_product(),cosine_distance()) instead of operators (<->,<#>,<=>) for quantized indexes

Critical Error Example:

-- ❌ WRONG: Using operators with quantized indexes

CREATE INDEX quantized_idx ON docs USING SPLIT_HNSW (embedding vector_l2_ops)

WITH (quantizer = 'int8');

-- This will NOT work correctly

SELECT * FROM docs ORDER BY embedding <-> '[1,2,3]' LIMIT 5;

-- ✅ CORRECT: Using functions with quantized indexes

SELECT * FROM docs ORDER BY l2_distance(embedding, '[1,2,3]') LIMIT 5;This comprehensive guide covers all aspects of vector search in Tacnode, from basic setup to advanced hybrid search implementations.